Taipei, September 4, 2024 - Innodisk announced its first Compute Express Link (CXL) memory module, intended for use in AI-powered servers and cloud data centers.

But first, let's take a quick look at what CXL technology is and where it’s used.

Compute Express Link (CXL) is a cache-coherent interconnect that improves the efficiency of computing systems, especially those that rely on accelerators.

The main goal of CXL is to decouple memory from compute. Traditionally, memory has been tightly coupled to the CPU or GPU, which makes it hard to scale memory independently of compute. With CXL, memory can be pooled and shared across multiple compute nodes, so capacity can grow independently of compute, allowing more efficient resource utilization and improved performance.

Another use case for CXL is low-latency data transfer between compute nodes. Its high-speed interconnect can link multiple servers or workstations, letting them communicate with very low latency and share memory from a single pool.
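The pooling idea can be illustrated with a toy model. The sketch below is not a CXL API: the `MemoryPool` class, the host names, and the sizes are all hypothetical, and it only shows how capacity that is not bound to one host can be handed out and reclaimed on demand.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class MemoryPool:
    """Toy model of a shared memory pool (hypothetical, not a real CXL interface)."""
    capacity_gb: int
    allocations: Dict[str, int] = field(default_factory=dict)

    @property
    def free_gb(self) -> int:
        # Capacity not currently assigned to any host.
        return self.capacity_gb - sum(self.allocations.values())

    def allocate(self, host: str, size_gb: int) -> bool:
        """Assign size_gb more memory to host; refuse if the pool cannot cover it."""
        if size_gb <= 0 or size_gb > self.free_gb:
            return False
        self.allocations[host] = self.allocations.get(host, 0) + size_gb
        return True

    def release(self, host: str) -> int:
        """Return everything held by host to the pool and report how much that was."""
        return self.allocations.pop(host, 0)


pool = MemoryPool(capacity_gb=256)
first = pool.allocate("host-a", 192)    # fits
second = pool.allocate("host-b", 128)   # refused: only 64 GB is free
pool.release("host-a")                  # host-a no longer needs the memory
third = pool.allocate("host-b", 128)    # the same request now succeeds
print(first, second, third, pool.free_gb)
```

The point of the model is that the second host gets memory the first host has given back, without any DIMMs being moved between machines.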

CXL operates on three main protocols:

- The CXL.io protocol is based on PCIe 5.0 (PCIe 6.0 from CXL 3.0 onward). It is used for initialization, link establishment, device discovery and enumeration, and register access using non-coherent loads and stores.
- The CXL.cache protocol defines the interaction between a host (usually a CPU) and a device (such as a CXL memory module or accelerator). It allows attached CXL devices to cache the host's memory.
- The CXL.mem protocol (also written CXL.memory) allows the host CPU to directly access the memory of attached devices, similar to how the CPU uses dedicated storage memory or the memory of a graphics card or accelerator.

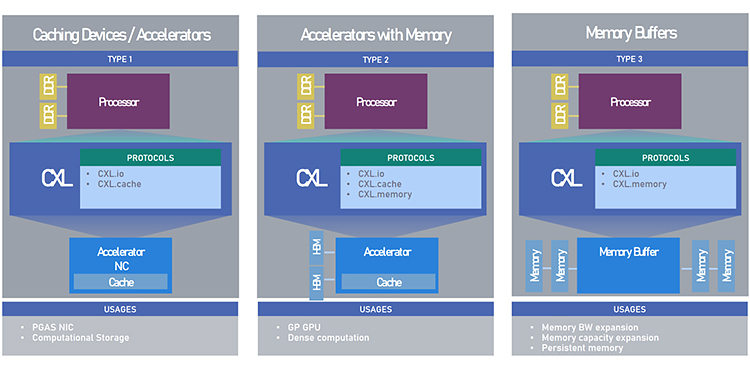

There are three types of CXL devices: Type 1 (CXL.io + CXL.cache), Type 2 (CXL.io + CXL.cache + CXL.mem), and Type 3 (CXL.io + CXL.mem). Support for the CXL.io protocol is mandatory for all types.

Type 1 can be thought of as an accelerator, such as a network card, that has direct access to host memory.

Type 2 is a graphics-type accelerator with its own memory: the GPU can access host memory, and the host processor can access GPU memory.

Type 3 is a memory device whose main purpose is to provide CXL memory space to the host processor.
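The mapping between device types and protocols described above can be written down as a small lookup table. This is only an illustrative sketch: the `Protocol` flag, the `DEVICE_TYPES` table, and the `classify` helper are names invented here, not part of any CXL software stack.

```python
from enum import Flag, auto
from typing import Optional


class Protocol(Flag):
    """The three CXL protocols, as combinable flags."""
    IO = auto()
    CACHE = auto()
    MEM = auto()


# Protocol set for each device type, as listed in the text.
DEVICE_TYPES = {
    1: Protocol.IO | Protocol.CACHE,
    2: Protocol.IO | Protocol.CACHE | Protocol.MEM,
    3: Protocol.IO | Protocol.MEM,
}


def classify(protocols: Protocol) -> Optional[int]:
    """Return the device type matching this exact protocol set, or None."""
    for device_type, required in DEVICE_TYPES.items():
        if protocols == required:
            return device_type
    return None


# A memory module exposes CXL.io and CXL.mem, so it is a Type 3 device.
print(classify(Protocol.IO | Protocol.MEM))
# A combination without CXL.io matches no type, since CXL.io is mandatory.
print(classify(Protocol.CACHE | Protocol.MEM))
```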

CXL has several versions:

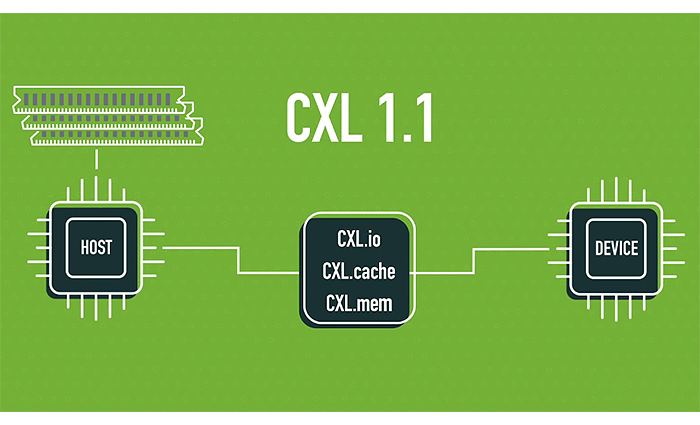

CXL 1.1 works in system topologies under the control of a single host processor, with CXL devices attached inside that system.

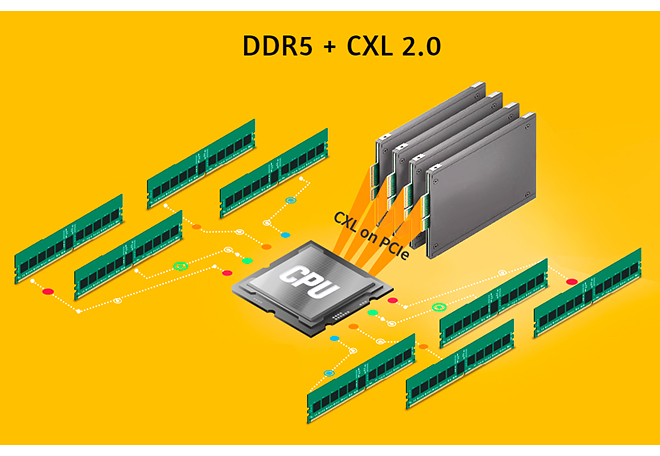

CXL 2.0 adds CXL switching. Switching and memory pooling allow multiple hosts and devices to connect to a switch, after which the devices can be assigned, either entirely or as virtual logical blocks, to different hosts. For example, if a server that carries eight 128 GB DIMMs is fitted with four 64 GB CXL modules, total memory capacity grows by a quarter and total throughput reportedly by 40%.
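The "quarter" in the capacity figure follows directly from the module counts and sizes quoted above, as the short calculation below shows. The variable names are illustrative. The 40% throughput figure cannot be derived from these numbers alone and is not reproduced here.

```python
# Figures quoted in the text.
dimm_count, dimm_gb = 8, 128   # eight 128 GB DIMMs
cxl_count, cxl_gb = 4, 64      # four 64 GB CXL modules

dimm_total_gb = dimm_count * dimm_gb        # capacity from DIMMs alone
cxl_total_gb = cxl_count * cxl_gb           # capacity added through CXL
total_gb = dimm_total_gb + cxl_total_gb     # combined capacity
increase = cxl_total_gb / dimm_total_gb     # relative gain over DIMMs alone

print(f"DIMM: {dimm_total_gb} GB, CXL: {cxl_total_gb} GB, total: {total_gb} GB")
print(f"Capacity increase: {increase:.0%}")
```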

CXL 3.0 adds multi-level switching, which helps implement device fabrics with non-tree topologies such as mesh, ring, or spine/leaf.

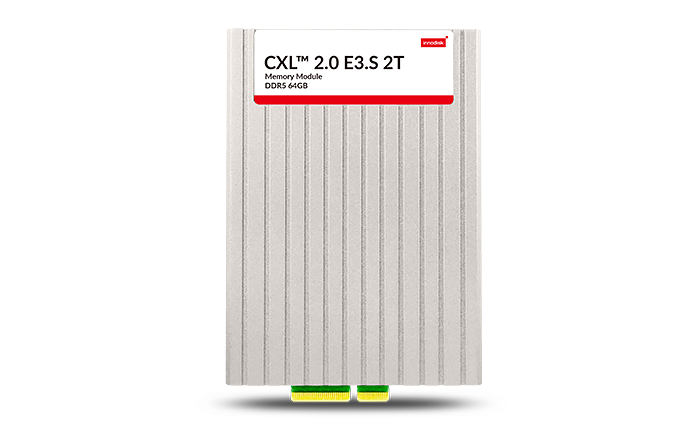

Innodisk CXL 2.0 E3.S 2T

In designing its CXL module, Innodisk set out to combine the latest technologies in one product.

The Innodisk module is a CXL 2.0 Type 3 device (CXL.io + CXL.mem).

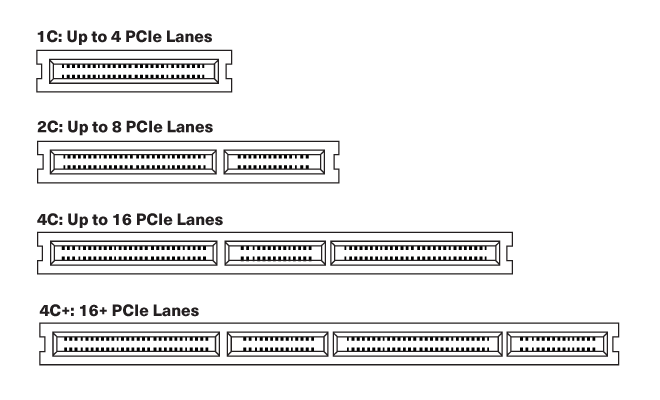

The device comes with an 84-pin 2C connector.

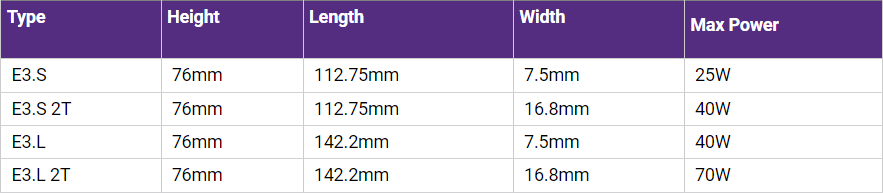

To implement the CXL interface, Innodisk built the memory module in the E3.S 2T form factor, which is based on the EDSFF standard.

The main feature of this memory module is its PCIe Gen5 x8 interface, which runs at up to 32 GT/s per lane and provides up to 32 GB/s of bandwidth.
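The 32 GB/s figure is consistent with a PCIe Gen5 x8 link, as the rough calculation below shows. It is a sketch under stated assumptions: the `pcie_bandwidth_gb_s` helper is a hypothetical name, the result is per direction, and the only overhead modeled is the 128b/130b line encoding that PCIe Gen5 uses. Protocol overhead above the physical layer is ignored.

```python
def pcie_bandwidth_gb_s(gt_per_s: float, lanes: int, encoding: float = 128 / 130) -> float:
    """Approximate one-direction link bandwidth in GB/s.

    gt_per_s: transfer rate per lane in GT/s (one bit per transfer per lane)
    lanes:    link width, e.g. 8 for an x8 link
    encoding: fraction of transferred bits that carry payload (128b/130b by default)
    """
    return gt_per_s * lanes * encoding / 8  # divide by 8 to convert bits to bytes


raw = pcie_bandwidth_gb_s(32, 8, encoding=1.0)  # headline figure, no encoding overhead
effective = pcie_bandwidth_gb_s(32, 8)          # after 128b/130b encoding

print(f"raw: {raw:.1f} GB/s, after encoding: {effective:.2f} GB/s")
```

The headline 32 GB/s corresponds to the raw rate. The usable figure is slightly lower once line encoding is taken into account.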

The module provides 64 GB of DDR5 memory.

The operating temperature range is 0 °C to 70 °C.

Shipments of this memory module are planned to begin in the first quarter of 2025.

For further technical information, inquiries about offers or placement of orders, please contact our sales team at sales@ipc2u.com

Find more Industrial RAM in our catalog.